welcome to The Prompter #004!

this is a weekly newsletter written by krea.ai for dreamers, AI whisperers and prompters.

📰 AI news

Dream Studio

phase II of the Stable Diffusion beta began with Dream Studio, a new suite of generative AI tools released by StabilityAI.

Emad (CEO at StabilityAI) stated that this tool was not meant to be a consumer product but rather a funnel that lets anyone test and play with the features developed by his company. even so, the tool comes with some interesting features.

the current release comes with the same settings we had in the Stable Diffusion discord, but with a user interface that makes them easier to tune and a gallery that shows everything we have created, which is great.

we can expect to be able to create animations with this tool pretty soon, as well as node-based interfaces that combine different functionalities, and even the integration of other famous AI text-to-image models, such as VQGAN or Disco Diffusion.

the pricing of the tool was well received by the community: with $10 we'll be able to generate around 1,000 images, so ~1 cent per generation.
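the per-image estimate is just the budget divided by the number of images, using the approximate figures reported above:

```latex
\frac{\$10}{1{,}000\ \text{images}} \approx \$0.01\ \text{per image}
```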

here’s a great guide created by @MrH3RB about this tool.

musika

this is one of the coolest works we’ve seen for music generation.

the recent paper by @marco_ppasini presents a generative AI model that can produce music much faster than real-time and USING A CONSUMER CPU!!

the model can also be trained on a consumer GPU, and the code and weights will be available soon on their github repo.

for now, we can enjoy some amazing AI-generated techno or AI piano in this huggingface space.

🛠️ tools for prompting

tool to preview animations

generating interesting animations with diffusion models is a tedious task: it requires lots of settings to be tuned manually for each portion of the animation, and it's very hard to know what the result will look like until it is generated (which can take hours in some cases).

@pharmapsychotic recently shared an awesome tool to help anyone who plans to create animations with Disco Diffusion.

this project runs on Google Colab and uses the same animation parameters as Disco Diffusion.
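to get a feel for what such a preview has to compute, here is a minimal sketch that turns a keyframe schedule into one value per frame. it assumes the comma-separated `frame: (value)` strings Disco Diffusion uses for settings like zoom or angle, handles plain numeric values only, and uses linear interpolation; `parse_keyframes` and `interpolate_schedule` are hypothetical helpers, not code from the tool.

```python
import re

# assumption: schedules look like "0: (1), 10: (1.05)" (comma-separated frame: (value) pairs)
KEYFRAME = re.compile(r"\s*(\d+)\s*:\s*\(([^)]*)\)\s*")


def parse_keyframes(schedule: str) -> dict[int, float]:
    """Parse 'frame: (value)' pairs into a {frame: value} dict (numbers only)."""
    frames = {}
    for part in schedule.split(","):
        match = KEYFRAME.fullmatch(part)
        if match is None:
            raise ValueError(f"bad keyframe: {part!r}")
        frames[int(match.group(1))] = float(match.group(2))
    return frames


def interpolate_schedule(schedule: str, max_frames: int) -> list[float]:
    """Linearly interpolate the keyframes into one value per frame."""
    keys = sorted(parse_keyframes(schedule).items())
    values = []
    for frame in range(max_frames):
        if frame <= keys[0][0]:
            values.append(keys[0][1])  # hold the first value before the first key
        elif frame >= keys[-1][0]:
            values.append(keys[-1][1])  # hold the last value after the last key
        else:
            # find the two keyframes around this frame and blend between them
            for (f0, v0), (f1, v1) in zip(keys, keys[1:]):
                if f0 <= frame <= f1:
                    t = (frame - f0) / (f1 - f0)
                    values.append(v0 + t * (v1 - v0))
                    break
    return values
```

for example, `interpolate_schedule("0: (1), 10: (1.05)", 12)` ramps the zoom from 1.0 to 1.05 over the first ten frames and then holds it, which is exactly the kind of curve that is hard to picture before hours of rendering.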

Artbreeder collage tool

the genius team at @Artbreeder is working on integrating their collage tool with Stable Diffusion.

this tool enables artists to create a collage of simple shapes and enhance them with a text-to-image model.

the possibility of using an initial image gives us a lot of control over where the elements from our prompt should appear.

inpainting in Disco Diffusion

@cut_pow shared a Colab Notebook with a method to apply inpainting to Disco Diffusion.

the notebook lets us interactively create masks over the parts of the image that we want to inpaint, and it can be combined with everything Disco Diffusion offers, such as 2D or 3D animations.
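one common way to do inpainting with a diffusion model (not necessarily the method in this notebook) is mask blending: at every denoising step, keep the model's output inside the mask and overwrite everything outside it with the original image, noised to the same level. the sketch below shows a single blending step; `blend_inpainting_step` is a hypothetical helper and `alpha_bar_t` stands for the noise-schedule value at the current step.

```python
import numpy as np


def blend_inpainting_step(x_generated, x_original, mask, alpha_bar_t, rng):
    """One mask-blending step for diffusion inpainting (sketch).

    mask is 1 where new content should be generated and 0 where the original
    must be kept; alpha_bar_t is the cumulative noise-schedule value at this step.
    """
    noise = rng.standard_normal(x_original.shape)
    # noise the original image to the same level as the current sample
    x_known = np.sqrt(alpha_bar_t) * x_original + np.sqrt(1.0 - alpha_bar_t) * noise
    # generated pixels inside the mask, (noised) original pixels outside it
    return mask * x_generated + (1.0 - mask) * x_known
```

repeating this after each denoising step keeps the unmasked region anchored to the original while the masked region is free to follow the prompt.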

prompt randomizer

this simple tool created by @kokuma is very handy for getting variations of our own prompts.

it allows us to re-order the modifiers we use in a prompt and explore how the ordering affects our final generations.
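the idea is easy to reproduce if we want to script our own ordering experiments. this is a minimal sketch, not the tool's actual code: it assumes a prompt is a subject followed by comma-separated modifiers, and `shuffled_prompts` and `all_orderings` are hypothetical helpers.

```python
import itertools
import random


def shuffled_prompts(subject, modifiers, n, seed=None):
    """Return n prompts that use the same modifiers in random orders."""
    rng = random.Random(seed)
    prompts = []
    for _ in range(n):
        order = modifiers[:]  # copy so the caller's list is not mutated
        rng.shuffle(order)
        prompts.append(", ".join([subject, *order]))
    return prompts


def all_orderings(subject, modifiers):
    """Enumerate every ordering; this grows as len(modifiers)!, so keep lists short."""
    return [", ".join([subject, *perm]) for perm in itertools.permutations(modifiers)]
```

fixing the `seed` makes a batch reproducible, so we can regenerate the same set of orderings and compare them side by side.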

MidJourney styles and keywords references

apart from being one of the most epic github repositories we've seen, the styles-and-keywords-reference site is an amazing resource for exploring and visualizing prompts in MidJourney.

the site even lets us see how the different models in MidJourney interpret and render a given word.

Understanding MidJourney (and SD) through teapots

when exploring the possibilities of text-to-image models, it is common to pick a concept or an idea and generate variations of it with different modifiers.

for example, in the Stable Diffusion Art Studies, they create all their generations from two concepts: “a portrait of a character in a scenic environment by [artist]” and “a building in a stunning landscape by [artist]”.
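a study like this boils down to filling a fixed template with one modifier at a time. the sketch below builds such a prompt grid from the two templates quoted above; the artist names are only example inputs, and `study_prompts` is a hypothetical helper, not code used by the Art Studies.

```python
TEMPLATES = [
    "a portrait of a character in a scenic environment by {artist}",
    "a building in a stunning landscape by {artist}",
]


def study_prompts(templates, artists):
    """Fill every template with every artist, grouped by artist."""
    return [t.format(artist=a) for a in artists for t in templates]
```

swapping the templates for something like "a teapot in the style of {artist}" gives the same kind of grid for a teapot study.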

Rex Wang decided to use teapots for his ongoing studies with MidJourney and Stable Diffusion.

this is a great resource for getting prompt ideas and visualizing how each model behaves with different text modifiers.

🎨 AI Art

experimenting with initial images in Stable Diffusion

using initial images with Stable Diffusion provides us with visual means to communicate our ideas effectively with the AI.

the technique for using initial images is quite simple if we know the basics of how diffusion models generate images.

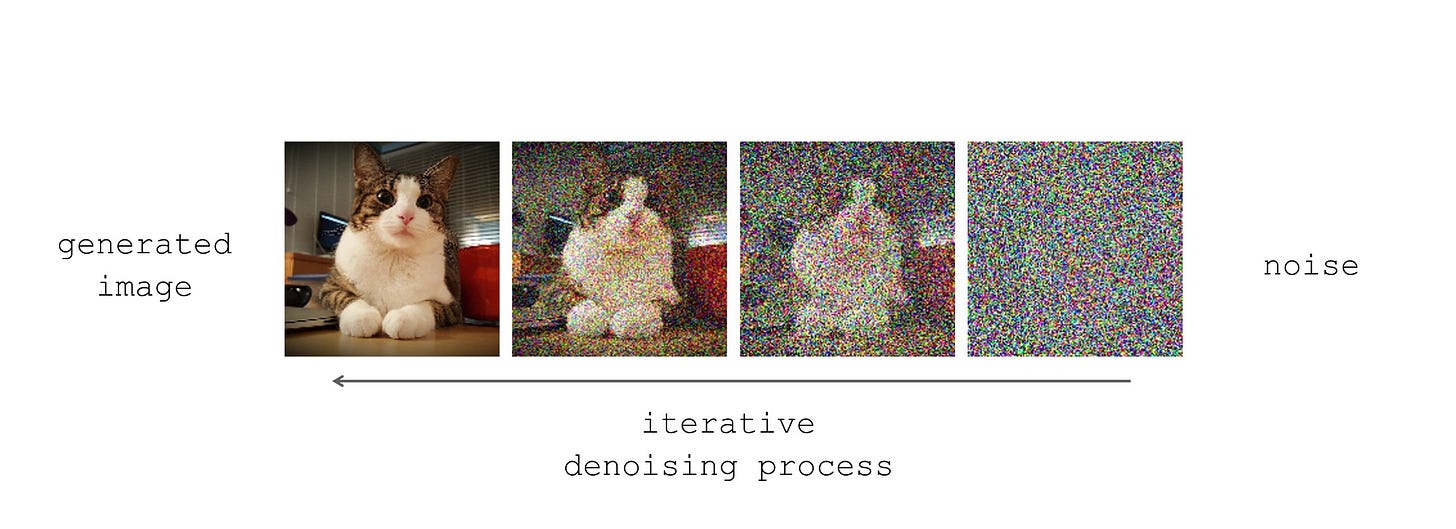

at a high level, the image synthesis in diffusion models consists of an iterative process of “denoising”—removing noise from an image.

starting from an image that is complete noise, diffusion models are capable of generating a hyper-realistic image by running this denoising process over and over.
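for readers who want the math, the standard DDPM formulation (a common textbook description, not specific to any model mentioned here) writes a noisy version of a clean image $x_0$ at step $t$ in closed form, where $\bar\alpha_t = \prod_{s=1}^{t}(1-\beta_s)$ falls from about 1 to about 0 as $t$ goes from $0$ to $T$. generation then runs a learned denoiser backwards, one step at a time, from pure noise:

```latex
\begin{aligned}
x_t &= \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1-\bar\alpha_t}\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I) \\
x_T &\sim \mathcal{N}(0, I) \;\to\; x_{T-1} \;\to\; \cdots \;\to\; x_1 \;\to\; x_0
\end{aligned}
```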

the following image depicts this denoising process; note how the model turns an image of pure noise into a realistic cat.

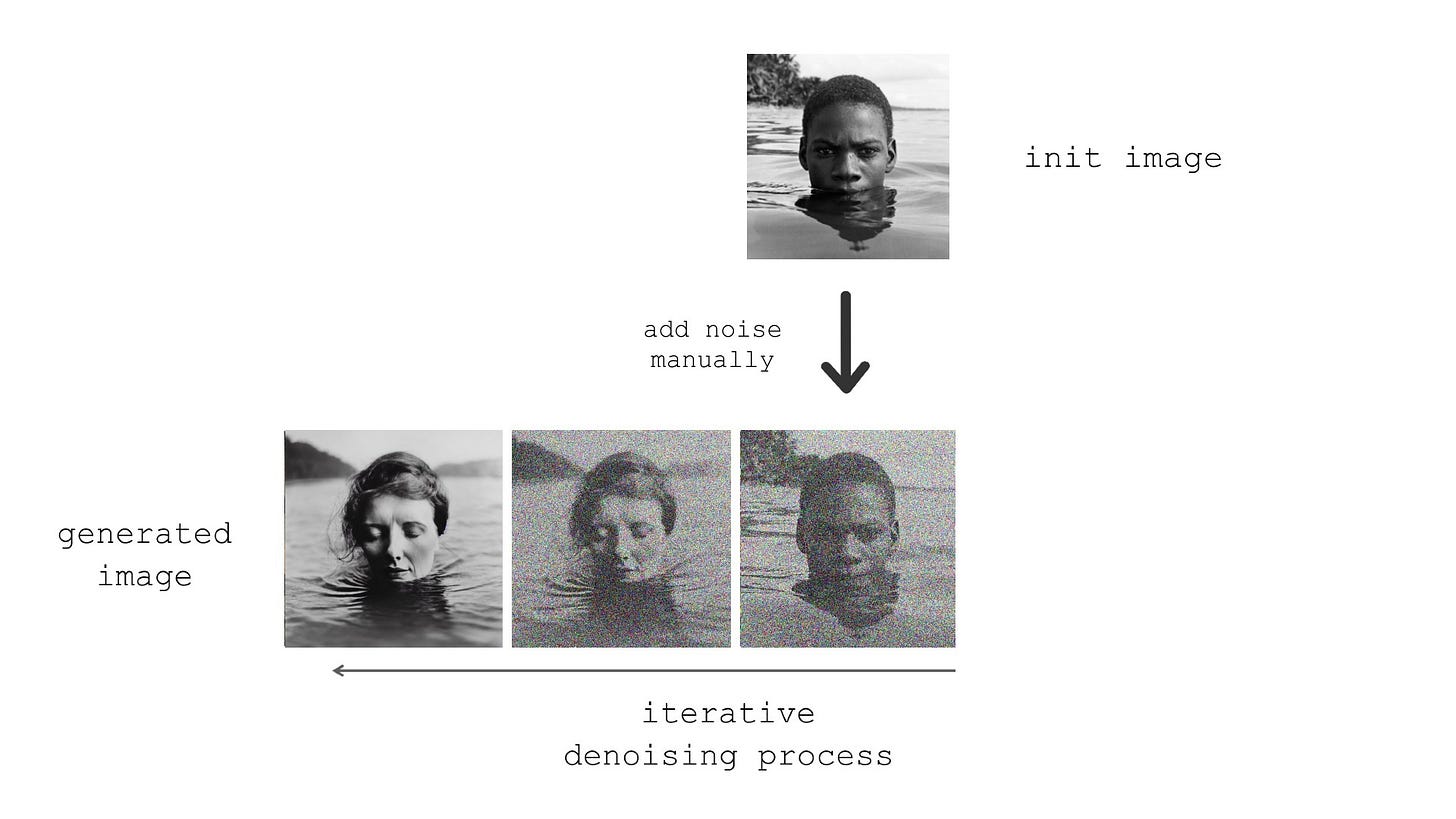

generating with initial images simply consists of manually adding noise to an image and using it as the starting point for the iterative diffusion process.

this way, we only run a portion of the denoising process, which allows us to keep a lot of the content and style of the initial image.
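here is a minimal sketch of that "add noise, then only run part of the process" step, assuming a linear DDPM-style noise schedule and a `strength` knob in [0, 1] similar to the one many img2img front ends expose. `linear_alpha_bars` and `noise_init_image` are hypothetical helpers; the actual denoising model is left out, and real Stable Diffusion noises the VAE latents of the image rather than raw pixels.

```python
import numpy as np


def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products of (1 - beta) for a linear noise schedule."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    return np.cumprod(1.0 - betas)


def noise_init_image(x0, strength, alpha_bars, rng):
    """Noise an initial image to the step implied by `strength` (sketch).

    strength = 0 leaves the image almost untouched; strength = 1 is close to
    pure noise. Returns the noisy array and the step to start denoising from;
    a model would then run only steps t_start, t_start - 1, ..., 0.
    NOTE: Stable Diffusion does this on VAE latents; x0 here is any array.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    t_start = int(strength * (len(alpha_bars) - 1))
    a = alpha_bars[t_start]
    eps = rng.standard_normal(x0.shape)
    x_t = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps
    return x_t, t_start
```

a higher `strength` starts the denoising from a noisier image and reruns more of the process, so the result drifts further from the initial image; a lower one keeps more of its composition and colors.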

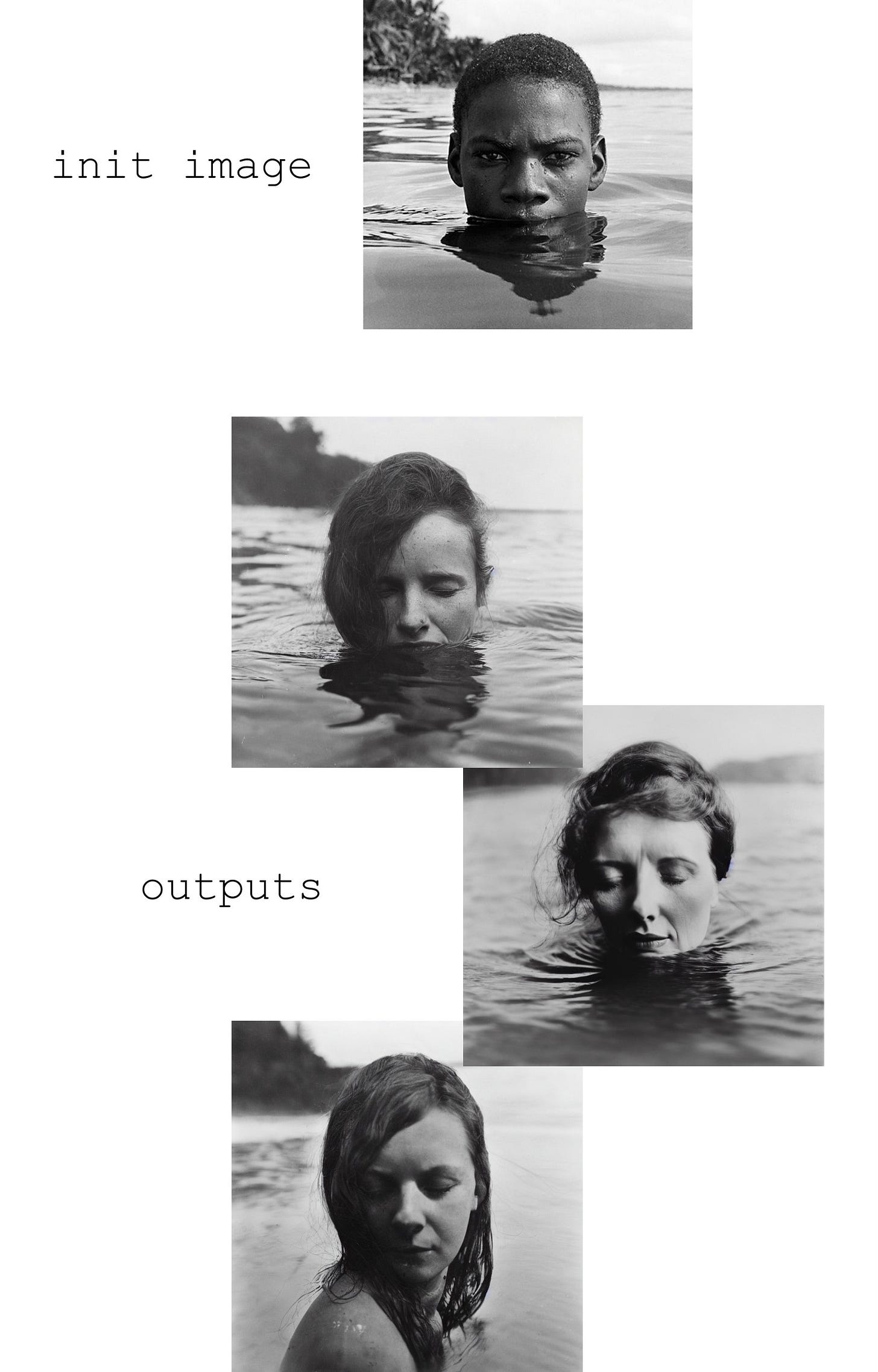

here are some examples of this technique.

the credit for the previous generations goes to @blessedbck, who has been doing an intense exploration of the use of init images with Stable Diffusion.

here’s a post from @EErratica where the same technique is applied for turning sketches into illustrations full of detail.

this is just a taste of how AI models can speed up creative processes by 10x, and we've seen nothing yet!

🦾 krea updates

release of open prompts

open prompts is an open knowledge base of prompt modifiers.

anyone can contribute their ideas, and all the results are accessible for free at krea.ai.

large dataset of stable diffusion generations

we have more than 700k unique generations made with Stable Diffusion.

all these generations are searchable for free on krea.ai; you can use our website to get inspiration and to explore what others have created with this model.

new UI features

enable/disable modifiers.

visualize modifiers used for each generation.

soon

integration of other AI models into krea.ai.

new UI features optimized for inspiration.

modifier recommendations.

we’d love to hear your thoughts, ideas, and feedback, so reach out!