The Prompter #002

07/31/22-08/07/22

welcome to The Prompter #002!

this is a weekly newsletter written by us (krea.ai) to keep you updated with the latest around AI and prompt engineering.

make sure to share your thoughts, ideas, and feedback with us.

it has been an intense week; new models, amazing papers, and new AI tools.

let’s get started!

this week at krea 🦾

we launched a new feature for organizing text prompts.

we also created a new UI design, ready to handle the image editing functionalities that we’ll implement this week.

a glimpse into the future 🔮

combining real-world images with your prompts

this work will able us to take pictures from the real world and use them in our generations.

the way how it works is by training a system capable of finding what they call “pseudo-prompts”, which are prompts optimized to represent the content or style of a reference image.

we can use pseudo-prompts as if they were regular text prompts. For example, if we found that S* is the perfect way to represent your cat, with the text “Banksy painting of S*” we’ll be able to generate a Banksy artwork of *literally* your cat 🙀

the model even works with inpainting!

s/o to the team behind this great work: Rinon Gal, Yuval Alaluf, Yuval Atzmon, Or Patashnik, Amit H. Bermano, Gal Chechik, and Daniel Cohen-Or.

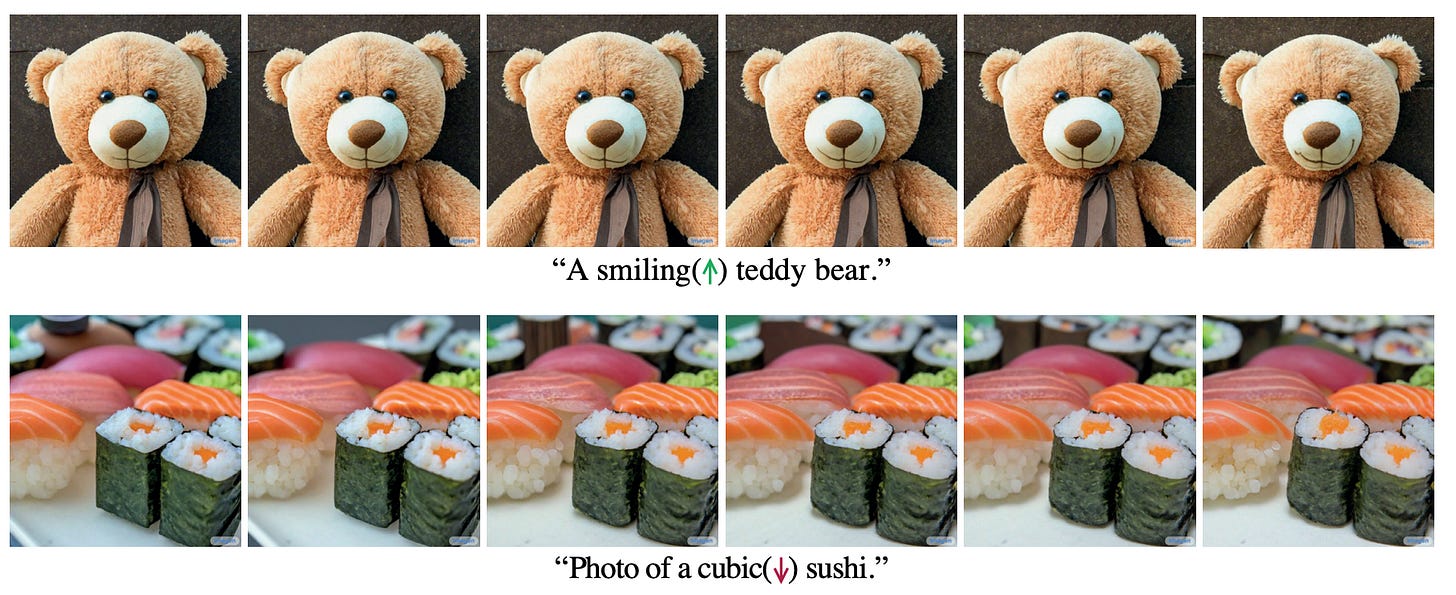

edit parts of an image with prompts

does your cat need a new look? no need to buy new clothes, just prompt some fresh styles!

this work presents a technique to edit specific elements from an image with just text prompts.

for their experiments, they used Imagen, an AI model from Google.

their idea can also be used to weigh the importance of certain parts of a text prompt, something key for future prompt-powered editing tools—like krea.ai!

s/o to the amazing team behind this work: Amir Hertz, Ron Mokady, Jay Tenenbaum, Kfir Aberman, Yael Pritch, and Daniel Cohen-Or.

stable diffusion is out! 💥

Emad announced the new discord bots to beta test stable diffusion.

Gene Kogan shared a prototype of a new interactive tool to use Sable Diffusion

Xander hacked stable diffusion to generate image interpolations

sexy colabs 👀

@deKxi shared a new Colab Notebook to create depth warped zooms with DALL·E 2

@PDillis shares his new repo to create image interpolations with the internal representations of StyleGAN3.

other tools, news, and resources 📰

@FabianMosele created an amazing and detailed interactive website to visualize the evolution of text-to-image AI

PITI-Synthesis demo on huggingface enables us to play with Image-to-Image Translation.

GLM 130-B, a massive English-to-Chinese language model just got released!

final thoughts 🤔

the release of stable diffusion is huge for the world of generative AI art.

on the one side, the dataset to train the model was not as filtered as DALL·E 2, so it knows a wider variety of aesthetics, famous people, and artists. While this comes with the downside of people using it to create deep fakes or sensitive content, it opens a new dimension of creative possibilities. Taking a quick look on twitter it’s easy to see how artistic people are able to get with this model.

on the other side, having full access to the code and AI models that Stability AI trained will enable builders like us to do way more than what we could have done with just an API. We’ll be able to create our own versions of the model specialized in different domains (such as paintings or 3D characters) and hack new applications, such as creating “pseudo prompts” to use real-world images in our generations.

we’ll be working hard to provide prompt engineers with everything they need to effectively use this technology, stay tuned!